A/B Testing With Meta’s Tools

As arts marketers, we’re always informally testing and learning within our social campaign strategies. Whether we’re looking at organic or paid content, we take careful note when a particular post makes a big impact, be it clicks and engagement or purchases and revenue.

Meta’s A/B Testing tool within Ads Manager lets us turn these casual, informal tests into more controlled ones, which in turn lets us build a bank of more reliable learnings to power future strategic decisions! The formal A/B Testing structure ensures that nobody is exposed to both campaigns, and the spend is split down the middle.

QUESTION: HOW MUCH CONTENT IS ENOUGH CONTENT?

We had a major symphony client who was in the habit of running a dozen or more campaigns on Meta at any given time. This created an extraordinary content demand, since they’d historically aimed to swap the posts in each campaign every 7 days. Curious whether less frequent creative updates would affect campaign results, we turned to the A/B Testing tool.

Setup

Two campaigns, identical except for one variable. Version A swapped creative every 10 days; Version B swapped creative every 7 days. The campaign objective, targeting, and creative components were otherwise the same.

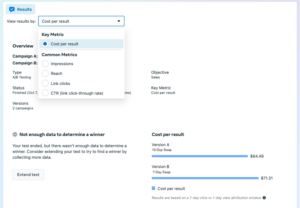

Results

Ultimately, Meta’s testing tool found that although the campaign with less frequent content swaps finished with a lower cost per result (in this case, cost per purchase), the difference was not statistically significant.
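For readers curious what “not statistically significant” means in practice, here is a minimal sketch of one common check: a two-proportion z-test comparing the purchase rates of two versions. This is a generic statistics technique, not necessarily how Meta’s tool calculates its results, and the counts below are hypothetical, not this client’s data.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for H0: versions A and B share the same conversion rate."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate, assuming (under H0) there is no real difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts (NOT the client's data): purchases out of people reached per version
z, p = two_proportion_z_test(conv_a=60, n_a=20_000, conv_b=50, n_b=20_000)
print(f"z = {z:.2f}, p = {p:.2f}")  # z = 0.95, p = 0.34
```

With these made-up numbers, Version A converts 20% better than Version B, yet a p-value of about 0.34 is well above the conventional 0.05 threshold: at this sample size, a gap that large could plausibly be down to chance.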

However, a more interesting story emerged when we looked at the holistic reporting for both campaigns. The campaign with longer-running ads had slightly more impressions, reach, and link clicks with the same spend. It also had a higher post engagement rate (contrary to what we might have hypothesized, given that audiences would have seen the same handful of ads more frequently, rather than a larger variety of ads).

Moreover, the average order value for purchases attributed to the 10-day swap campaign was FAR higher than that of purchases attributed to the 7-day swap campaign, despite the 7-day swap campaign’s higher Add To Cart numbers. All in all, this indicated to our team that buyers who saw the same ads for 10 days were higher-quality and more committed.

Strategy impact

The team didn’t change the whole posting strategy overnight! But these findings reduced the stress around weekly content swaps, and certain long-running or evergreen campaigns will now use a 10-day schedule.

QUESTION: HOW DOES MANUAL SEGMENTATION COMPARE TO MACHINE LEARNING?

In a separate A/B Testing scenario, we were working with a client who came from an e-commerce background and had a long history of relying heavily on Meta’s automated targeting (powered by machine learning). We wanted to understand how those results would compare with the more highly segmented targeting that we tend to favor.

Setup

Again, two campaigns, identical except for one variable. Version A (S, or “Segmented”) had each bottom-, middle-, and top-of-funnel audience (including Advantage+) in its own segment. Version B (NS, or “Non-Segmented”) had just two targeting segments: one built from Meta’s automated Advantage+ audience and one that contained all bottom- and middle-of-funnel audiences (Emails, Retargeting, Lookalikes, etc.).

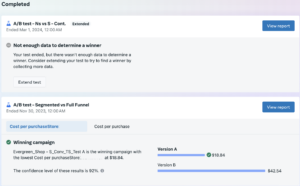

Results

In the test’s first 8 weeks, the Segmented (“traditional”) campaign was the clear winner. When the test was extended, however, the results became muddier, and Meta could not determine a winner.

Once again, our more holistic reporting added context. Looking at overall, month-over-month campaign results, we learned that the non-segmented campaign (more automated, more reliant on Meta’s machine learning) started to outperform the segmented campaign after about two months of running. This indicated to our team that while the platform’s machine learning capabilities are powerful, they take time (rather a lot of time!) to ramp up and gather enough data to perform well.

Strategy impact

We now use automated targeting strategies for long-running or evergreen campaigns, while continuing to lean on strategically segmented campaigns for flights of 8 weeks or less. In general, a campaign optimizes better with more data and signals, which can come from a longer flight OR from other factors, such as a larger budget.

TAKEAWAYS

We aren’t telling you all of this to give you insights into how frequently you should swap your campaign creative, or how or whether you should rely on Meta’s automated Advantage+ audiences.

Rather, we’d encourage you to strategically consider which elements of your campaign or creative strategies you want to test, and to use a holistic reporting method to supplement the data that Meta’s tool provides. The enormous advantage of using the A/B Testing tool lies in the campaign structure itself: it ensures that nobody is exposed to both campaigns, so the findings are cleaner and more trustworthy.

Keep in mind:

A test should run for at least 30 days

More budget will mean more likelihood of statistically significant results

Your campaign audience will be split along with the budget, so choosing to run a formal A/B Test doesn’t mean reaching fewer people by subdividing your spend.

You can always review the Best Practices directly from Meta to ensure successful tests!
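Why does more budget make significant results more likely? More budget buys more reach, and the smaller the difference you hope to detect, the more people each version needs to reach. The sketch below uses a standard textbook sample-size formula for comparing two conversion rates to make that concrete. It is a generic back-of-the-envelope calculation, not how Meta’s tool decides significance, and the 0.3% purchase rate and target lifts are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_version(base_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """People needed in EACH version to detect the lift with a two-sided two-proportion test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return math.ceil(n)


# Hypothetical: 0.3% of people reached purchase; detect a 20% relative lift
n_20 = sample_size_per_version(base_rate=0.003, relative_lift=0.20)
# A bigger lift is easier to spot, so it needs far fewer people per version
n_50 = sample_size_per_version(base_rate=0.003, relative_lift=0.50)
print(n_20, n_50)  # roughly 143,000 vs. 26,000 people per version
```

Under these assumptions, detecting a modest 20% lift takes more than five times the audience needed for a 50% lift, which is why a small budget often ends with an inconclusive test.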

WE’RE HERE TO HELP!

Get in touch with our Digital Advertising team to optimize your campaign strategy!